# Azure Data Studio Redshift full

Below is an image of the webpage, but I encourage you to visit their website and read the full report of the study.įor the research they compared Azure SQL Data Warehouse to Amazon Redshift, Google BigQuery and Snowflake. On Microsoft’s Azure SQL Data Warehouse product page, they compare their product’s performance and price against others based on a study by GigaOm. If not provided, the service generates it automatically.Interested in learning how Azure SQL Data Warehouse compares to the competition? Today I’d like to share some research about that comparison in performance and price. Indicate the S3 bucket to store the interim data. Refers to an Amazon S3 to-be-used as an interim store by specifying a linked service name of "AmazonS3" type. Property group when using Amazon Redshift UNLOAD. No (if "tableName" in dataset is specified) The type property of the copy activity source must be set to: AmazonRedshiftSource The following properties are supported in the copy activity source section: Property To copy data from Amazon Redshift, set the source type in the copy activity to AmazonRedshiftSource. This section provides a list of properties supported by Amazon Redshift source.

Copy activity propertiesįor a full list of sections and properties available for defining activities, see the Pipelines article. If you were using RelationalTable typed dataset, it is still supported as-is, while you are suggested to use the new one going forward. This property is supported for backward compatibility. No (if "query" in activity source is specified) The type property of the dataset must be set to: AmazonRedshiftTable To copy data from Amazon Redshift, the following properties are supported: Property This section provides a list of properties supported by Amazon Redshift dataset. If not specified, it uses the default Azure Integration Runtime.įor a full list of sections and properties available for defining datasets, see the datasets article. You can use Azure Integration Runtime or Self-hosted Integration Runtime (if your data store is located in private network). The Integration Runtime to be used to connect to the data store. Mark this field as a SecureString to store it securely, or reference a secret stored in Azure Key Vault. Name of user who has access to the database. The number of the TCP port that the Amazon Redshift server uses to listen for client connections.

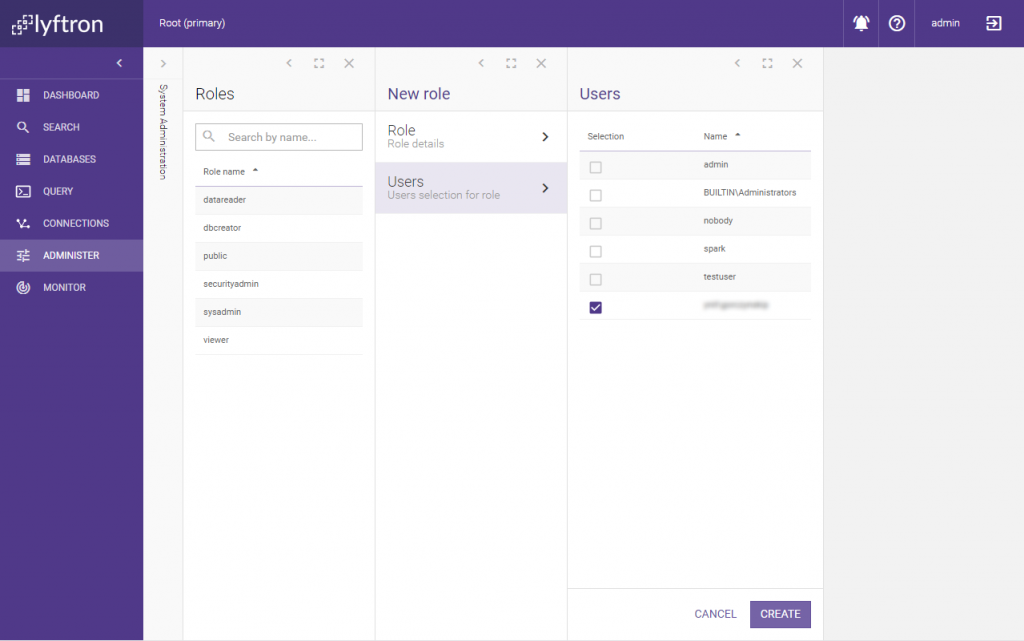

IP address or host name of the Amazon Redshift server. The type property must be set to: AmazonRedshift The following properties are supported for Amazon Redshift linked service: Property The following sections provide details about properties that are used to define Data Factory entities specific to Amazon Redshift connector. Search for Amazon and select the Amazon Redshift connector.Ĭonfigure the service details, test the connection, and create the new linked service.

Use the following steps to create a linked service to Amazon Redshift in the Azure portal UI.īrowse to the Manage tab in your Azure Data Factory or Synapse workspace and select Linked Services, then click New: To perform the Copy activity with a pipeline, you can use one of the following tools or SDKs:Ĭreate a linked service to Amazon Redshift using UI If you are copying data to an Azure data store, see Azure Data Center IP Ranges for the Compute IP address and SQL ranges used by the Azure data centers.See Authorize access to the cluster for instructions. If you are copying data to an on-premises data store using Self-hosted Integration Runtime, grant Integration Runtime (use IP address of the machine) the access to Amazon Redshift cluster.See Use UNLOAD to copy data from Amazon Redshift section for details. To achieve the best performance when copying large amounts of data from Redshift, consider using the built-in Redshift UNLOAD through Amazon S3.
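The linked service, dataset, and copy activity source properties described above end up as JSON definitions. The following is a minimal Python sketch of what those definitions might look like, assuming the JSON property names from Microsoft's Amazon Redshift connector reference (`server`, `port`, `database`, `username`, `password`, `connectVia`, `tableName`, `query`, `redshiftUnloadSettings`, `s3LinkedServiceName`, `bucketName`) and Redshift's default port 5439. The helper functions, resource names, and placeholder values are hypothetical. The code only builds the definitions as Python dicts and prints them as JSON; it does not call any Azure API.

```python
import json

# Sketch only: JSON property names are assumed from Microsoft's Amazon Redshift
# connector reference; the helper function names are hypothetical.


def redshift_linked_service(name, server, database, username, password,
                            port=5439, integration_runtime=None):
    """Build an AmazonRedshift linked service definition as a plain dict."""
    properties = {
        "type": "AmazonRedshift",
        "typeProperties": {
            "server": server,      # IP address or host name of the cluster
            "port": port,          # TCP port the cluster listens on (Redshift default: 5439)
            "database": database,  # assumed property, not listed in the text above
            "username": username,
            # SecureString marks the value as secret; a Key Vault reference is the alternative.
            "password": {"type": "SecureString", "value": password},
        },
    }
    if integration_runtime is not None:
        # Leaving connectVia out means the default Azure Integration Runtime is used.
        properties["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return {"name": name, "properties": properties}


def redshift_dataset(name, linked_service_name, table_name=None):
    """Build an AmazonRedshiftTable dataset; table_name maps to the legacy tableName."""
    type_properties = {}
    if table_name is not None:
        type_properties["tableName"] = table_name
    return {
        "name": name,
        "properties": {
            "type": "AmazonRedshiftTable",
            "linkedServiceName": {
                "referenceName": linked_service_name,
                "type": "LinkedServiceReference",
            },
            "typeProperties": type_properties,
        },
    }


def redshift_source(query=None, s3_linked_service_name=None, bucket_name=None):
    """Build the copy activity source section, optionally with UNLOAD settings."""
    source = {"type": "AmazonRedshiftSource"}
    if query is not None:
        source["query"] = query
    if s3_linked_service_name is not None:
        unload = {
            "s3LinkedServiceName": {
                "referenceName": s3_linked_service_name,
                "type": "LinkedServiceReference",
            },
        }
        if bucket_name is not None:
            # If omitted, the service generates the interim bucket itself.
            unload["bucketName"] = bucket_name
        source["redshiftUnloadSettings"] = unload
    return source


def has_read_target(source, dataset):
    """True if the source has a query or the dataset names a table."""
    type_properties = dataset["properties"]["typeProperties"]
    return "query" in source or "tableName" in type_properties


if __name__ == "__main__":
    # Placeholder values for illustration only.
    linked_service = redshift_linked_service(
        "AmazonRedshiftLinkedService",
        server="<cluster-endpoint>",
        database="<database>",
        username="<user>",
        password="<password>",
    )
    dataset = redshift_dataset("AmazonRedshiftDataset", "AmazonRedshiftLinkedService")
    source = redshift_source(
        query="SELECT * FROM public.sales",
        s3_linked_service_name="AmazonS3LinkedService",
    )
    print(json.dumps(
        {"linkedService": linked_service, "dataset": dataset, "source": source},
        indent=2,
    ))
```

`has_read_target` encodes the rule stated in the property lists: `query` is optional when the dataset specifies `tableName`, and vice versa, so at least one of the two should be present. Omitting `bucketName` while still passing an S3 linked service mirrors the documented behavior of letting the service generate the interim bucket.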
